ASTERISK

A Simple Product Framework To Help Navigate Ethical AI Development

AI has arguably reached a tipping point into mainstream use. What was, for most of its history, a technology advanced through academia is now being led by industry. Prior to 2014, most significant machine learning models were released by academia. Since then, the landscape has changed drastically: in 2022, there were 32 significant industry-produced machine learning models, compared with only 3 produced by academia [1]. New and powerful technological advances are making AI increasingly accessible to developers, suggesting that its application will only become more prevalent in our products and services. With these powerful technologies now open for use across industry, it's more important than ever to safeguard our users against the associated risks.

This article aims to introduce guiding principles for product builders looking to incorporate AI-centric solutions into their projects. For those who find that frameworks help them think effectively, these guidelines can be referred to as ASTERISK. They are shaped by personal experiences working in software development and product management across several AI teams and products. They are, however, by no means a catch-all solution and are not meant to be prescriptive. Instead, they aim to promote ethical thinking around AI as part of the product development process.

The framework is intentionally high-level so that it can be used in a modular fashion across varying forms of AI-centric product development. It serves as a way to navigate ethical dilemmas faced during product development and to ensure that findings from each step inform the next, for a positive and grounded product outcome. In this article, we apply it to generative AI, and in particular to large language models (LLMs).

The ASTERISK framework is as follows:

A: Assess Objectives

S: Sync Technology

T: Take Note Of Characteristics

E: Evaluate Risk

R: Recognize User Expertise

I: Inform On Limitations

S: Surface Insights

K: Keep Tracking

Let’s get into it.

A: Assess Objectives

As with any new product, we first need to assess our objectives. In this example, we’ll assume that we’re working for a small startup that is looking to disrupt the wellness industry.

Mission:

Support the wellbeing of the world’s professionals so that they can create a better tomorrow.

Product:

A subscription-based chatbot that offers wellness suggestions.

Users:

Business professionals with minimal spare time in their daily routines.

Value:

Derived when users improve their day-to-day mood after speaking with the chatbot.

Objective:

Offer fast and trustworthy wellness suggestions for business professionals experiencing a diverse range of challenges.

S: Sync Technology

Now that we have our objective in mind, we can sync it to the appropriate technology. To help us evaluate which technology will best enable our objective, we can take a holistic look at some of the technologies commonly used for chatbots in the past and evaluate their trade-offs. We’ll limit our comparison to two types of chatbots and select the one that best satisfies our prioritization criteria: implementation effort, positive impact on user value, and enablement of our mission. We rank each criterion from 1 to 3 in ascending order of value, so a higher score is always more favorable (for implementation effort, a 3 means the least effort).

Rule-Based:

These are systems that use a predefined set of rules and patterns when responding to user inputs. They generally follow a decision tree or flowchart-like structure.
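To make the decision-tree structure concrete, here is a minimal sketch of a keyword-rule chatbot. The rules, replies, and the `respond` helper are hypothetical illustrations rather than part of any real product; they show how a fixed rule table maps inputs to canned responses.

```python
# Hypothetical keyword rules: each maps a set of trigger words to one fixed reply.
RULES = [
    ({"stress", "stressed", "anxious"}, "Try a short breathing exercise."),
    ({"sleep", "tired", "insomnia"}, "Aim for a consistent bedtime."),
]
FALLBACK = "Sorry, I don't have a suggestion for that yet."


def respond(message: str) -> str:
    """Return the reply of the first rule whose trigger words appear in the message."""
    # Lowercase and strip trailing punctuation so "stress?" matches "stress".
    words = {w.strip("?.!,") for w in message.lower().split()}
    for triggers, reply in RULES:
        if words & triggers:  # any overlap between message words and trigger words
            return reply
    return FALLBACK


print(respond("Best way to reduce my stress?"))  # Try a short breathing exercise.
```

Every user who mentions stress receives the same reply regardless of their situation, which is the personalization gap reflected in the scores that follow.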

Implementation effort: Scores 3 as they are fairly easy to design and build.

Impact on user value: Scores 1 as fixed response patterns will not offer much in terms of personalized wellness suggestions.

Enablement of mission: Scores 1 as limited context awareness may lead to superficial and incoherent dialogue with users seeking a supportive experience.

Generative AI-Based:

These are systems which generate responses from machine learning models, such as LLMs in our case, that are trained on large datasets.

Implementation effort: Scores 2 as several tools are now available to jump-start their development.

Impact on user value: Scores 3 as their general language understanding and generation capabilities can cater to and produce a wide range of personalized wellness suggestions.

Enablement of mission: Scores 3 as they excel at generating coherent and contextually relevant outputs which can offer a more supportive conversational experience.

A generative AI-based approach with LLMs scores higher for our criteria and so we decide to explore it for our use case.
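The comparison above is simple arithmetic, but writing it down makes the decision easy to revisit if priorities change. A minimal sketch, using the scores from this section; the optional `weights` argument is our own hypothetical extension, since the comparison here weights all criteria equally.

```python
from typing import Optional

# Scores from the comparison above, 1 (least favorable) to 3 (most favorable).
# For implementation effort, a higher score means less effort.
SCORES = {
    "Rule-Based": {"implementation_effort": 3, "user_value": 1, "mission": 1},
    "Generative AI-Based": {"implementation_effort": 2, "user_value": 3, "mission": 3},
}


def total_score(criteria: dict, weights: Optional[dict] = None) -> float:
    """Sum the criterion scores; each criterion's weight defaults to 1 (equal weighting)."""
    weights = weights or {}
    return sum(score * weights.get(name, 1) for name, score in criteria.items())


def best_option(scores: dict, weights: Optional[dict] = None) -> str:
    """Return the option with the highest (optionally weighted) total score."""
    return max(scores, key=lambda option: total_score(scores[option], weights))


totals = {option: total_score(criteria) for option, criteria in SCORES.items()}
print(totals)               # {'Rule-Based': 5, 'Generative AI-Based': 8}
print(best_option(SCORES))  # Generative AI-Based
```

With equal weights the generative approach wins 8 to 5. If implementation effort were weighted five times more heavily (a hypothetical priority), the rule-based option would win 17 to 16, which shows how sensitive the choice is to the criteria we set in the first step.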

T: Take Note Of Characteristics

When working with different forms of artificial intelligence, noting the defining characteristics of the underlying technologies in clear and concise terms will help build our understanding of them.

In this case, an LLM, such as those found in popular applications like ChatGPT, generates responses by predicting which words or phrases (more precisely, tokens) are most likely to follow a given prompt, based on the patterns and associations it learned from its training data. For the sake of brevity, the characteristics we’ll focus on in this exercise concern training, architecture, and language understanding.
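As a drastically simplified illustration of "predicting which words are most likely to follow", the sketch below counts word pairs (bigrams) in a tiny made-up corpus and turns the counts into next-word probabilities. Real LLMs predict subword tokens with a neural network conditioned on the whole preceding context, not just the previous word; this toy only shows the statistical idea.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict:
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def next_word_probs(follows: dict, word: str) -> dict[str, float]:
    """Turn the follow-counts for `word` into a probability distribution."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


# Made-up toy corpus for illustration only.
corpus = [
    "take a break from work",
    "take a walk outside",
    "take a break and relax",
]
bigrams = train_bigrams(corpus)
print(next_word_probs(bigrams, "a"))  # 'break' ~0.67, 'walk' ~0.33
```

The model "prefers" whatever followed most often in its data, which is also why it has no built-in notion of whether a continuation is true.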

Training: Trained in a self-supervised manner on vast amounts of textual data to learn the statistical patterns and semantic relationships within language.

Architecture: Deep learning architecture based on transformers consisting of multiple layers of self-attention mechanisms that allow for the capture of intricate patterns and dependencies in language.

Language understanding: Ability to comprehend text and to generate human-like, coherent, and contextually relevant responses.
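Returning to the training characteristic: "self-supervised" means the labels come from the text itself, so no human has to annotate anything. A minimal sketch, using whitespace-split words in place of real subword tokens and a hypothetical `make_training_pairs` helper, shows how every position in a sentence becomes a (context, next token) training example.

```python
def make_training_pairs(tokens: list[str], context_size: int) -> list[tuple[tuple[str, ...], str]]:
    """Slide a window over the tokens: each window is an input, the next token is its label."""
    pairs = []
    for end in range(1, len(tokens)):
        # Use up to `context_size` preceding tokens as the input context.
        context = tuple(tokens[max(0, end - context_size):end])
        pairs.append((context, tokens[end]))
    return pairs


tokens = "take a break from work".split()
for context, target in make_training_pairs(tokens, context_size=3):
    print(context, "->", target)
# ('take',) -> a
# ('take', 'a') -> break
# ('take', 'a', 'break') -> from
# ('a', 'break', 'from') -> work
```

Scaled up to vast text corpora, this is what lets a model learn statistical patterns of language without labeled data.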

Together, these characteristics enable LLMs to handle large-scale training data and capture patterns from extensive text corpora, which ultimately underpins their ability to learn the patterns and structures of language.
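The architecture characteristic mentions self-attention. A minimal sketch of single-head scaled dot-product self-attention in plain Python follows; the token vectors and identity projection matrices are made-up placeholders, and the sketch omits what real GPT-style models add (learned weights, multiple heads, causal masking, feed-forward layers).

```python
import math


def softmax(scores):
    """Numerically stable softmax: exponentiate (shifted by the max) and normalize."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention without masking.

    x: n token vectors of size d; w_q, w_k, w_v: d x d_k projection matrices.
    Returns (outputs, attention_weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for q_i in q:
        # Compare this token's query with every token's key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        w = softmax(scores)  # this token's attention weights sum to 1
        weights.append(w)
        # The output is the attention-weighted average of all value vectors.
        outputs.append([sum(w_j * v_j[c] for w_j, v_j in zip(w, v)) for c in range(len(v[0]))])
    return outputs, weights


# Three toy 2-dimensional "token" vectors and identity projections (illustrative only).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, attn = self_attention(x, identity, identity, identity)
```

Each token's output mixes information from every other token in proportion to how well their query and key vectors match, which is how dependencies across a sentence are captured. Stacking many such layers is part of why the resulting behavior is hard to interpret.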

As a note, our findings here will vary greatly depending on the type of machine learning we are leveraging. In comparison, if we were using a naive Bayes model to build a spam classifier, we would have noted that it is a simple probabilistic classifier based on Bayes’ theorem, that it is trained by estimating the conditional probabilities of features given the class labels in the training data, and that it has no inherent language understanding capabilities. This would consequently have informed the subsequent steps differently.
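For contrast, a naive Bayes spam classifier fits in a few dozen lines, which underlines how differently its characteristics read. A minimal sketch of multinomial naive Bayes with add-one smoothing, trained on a tiny made-up dataset; it only estimates P(word | class) from counts and has no notion of word order or meaning.

```python
import math
from collections import Counter


class NaiveBayesSpam:
    """Multinomial naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, docs: list[str], labels: list[str]) -> "NaiveBayesSpam":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)  # used for the class priors P(c)
        self.word_counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(doc.lower().split())
        self.vocab = set().union(*self.word_counts.values())
        return self

    def log_posterior(self, doc: str, c: str) -> float:
        """log P(c) + sum of log P(word | c), up to a constant shared by all classes."""
        logp = math.log(self.doc_counts[c] / sum(self.doc_counts.values()))
        total = sum(self.word_counts[c].values())
        for word in doc.lower().split():
            # Add-one smoothing keeps unseen words from zeroing out the probability.
            logp += math.log((self.word_counts[c][word] + 1) / (total + len(self.vocab)))
        return logp

    def predict(self, doc: str) -> str:
        return max(self.classes, key=lambda c: self.log_posterior(doc, c))


# Made-up toy training data for illustration only.
docs = ["win a free prize now", "claim your free money",
        "meeting moved to monday", "lunch with the team tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
clf = NaiveBayesSpam().fit(docs, labels)
print(clf.predict("free prize money"))  # spam
```

Every number this model uses can be inspected directly, so the risks and explanations it calls for in later steps look very different from those of an LLM.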

E: Evaluate Risk

We’ve gained an understanding of LLMs, and now we’re able to evaluate the risk they may pose to our users. Let’s think through an example of an ethical concern that may appear as a result of this technology. Of course, in practice, there may exist additional concerns that should be considered throughout this step.

The patterns learned from our model’s training data are not intuitive to humans and in many cases are not completely understood even by experts in the AI field. This uncertainty in how our chatbot generates its wellness responses is a concern, as the responses can prove to be inaccurate. Adding to this, these potentially inaccurate responses may be presented in an authoritative manner. Up to this point, the user interface we had in mind resembled that of any other simple chatbot and didn’t account for this disconnect between how responses are generated and how they are presented.

We’ve determined that the risk of inaccuracy in our product has not yet been sufficiently addressed. We aren’t empowering users to apply their best judgment and think critically about the information presented, so our evaluation suggests the risk remains quite high. We therefore move on to the RISK portion of this framework: recognizing user expertise, informing on limitations, surfacing insights, and keeping track.

R: Recognize User Expertise

Now that we’ve established that our product may pose a risk to our users, recognizing their level of domain and technical expertise can help us calibrate an effective risk mitigation strategy. Results from our first step, assessing objectives, can help inform this assessment.

We know that our product will be a subscription-based chatbot that offers wellness suggestions to business professionals who have minimal spare time in their daily routines. Assuming they are not working in the healthcare space and that they have little tech expertise, we determine the following about our users:

Lack of health domain expertise:

This poses a challenge for them in assessing the validity of wellness suggestions.

Lack of AI domain expertise:

This poses a challenge for them in understanding the potential uncertainty of wellness suggestions offered.

Lack of advanced data literacy:

This makes it challenging for them to interpret any statistical explanations we may want them to reference.

We’ll keep these factors in mind as we develop mitigations going forward.
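One way to keep these factors in view is to record them explicitly and derive presentation choices from them. A minimal sketch; the `UserExpertise` profile and `presentation_plan` mapping are hypothetical, and the three mitigations are the ones developed in the following steps (accuracy disclaimers, training data characteristics, and word-based rather than numeric confidence).

```python
from dataclasses import dataclass


@dataclass
class UserExpertise:
    """Hypothetical profile of the expertise our users do or don't have."""
    health_domain: bool
    ai_domain: bool
    data_literacy: bool


def presentation_plan(user: UserExpertise) -> dict[str, bool]:
    """Map each expertise gap to a (hypothetical) mitigation in the interface."""
    return {
        # Without health expertise, users can't vet advice: remind them it isn't a source of truth.
        "show_accuracy_disclaimer": not user.health_domain,
        # Without AI expertise, explain where responses come from (training data characteristics).
        "show_data_characteristics": not user.ai_domain,
        # Without advanced data literacy, prefer words ("High") over raw numbers ("0.7").
        "use_categorical_confidence": not user.data_literacy,
    }


business_professional = UserExpertise(health_domain=False, ai_domain=False, data_literacy=False)
print(presentation_plan(business_professional))  # every mitigation enabled
```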

I: Inform On Limitations

Having determined that our users have limited expertise, we recognize that we would be placing an expectation on them to effectively evaluate the responses generated by our generative AI-powered solution, which they may not be equipped to meet. We can use the findings from our second step, syncing technology, to inform users about our product’s limitations.

The limitations outlined here are likely to be general and applicable across more than one form of artificial intelligence. They serve mainly as a broad safety net, notifying users of some of the wider potential gaps in our solution.

We previously noted that generative AI, as the name suggests, generates responses from machine learning models that have been trained on large datasets. So, weaknesses in our wellness suggestions can stem both from the auto-generated responses themselves and from the data the model was trained on. In our case, we aren’t verifying that either the training data or the generated responses are factually correct, which only amplifies these weaknesses.

Before moving on, it should be noted that we could, of course, take a different route here and leverage only trusted sources of empirical data or apply some fact-checking mechanism. We’ll assume, though, that the company feels this pivot would limit the breadth of questions we can help users with, and decides against it.

Auto-generated responses:

We can explicitly make visible to users that wellness suggestions may be subject to inaccuracies. Simple disclaimers placed front and center in the product can help remind users that outputs are not a source of truth.

Large corpus of training data:

We can provide users with characteristics of the data that we’ve leveraged. This can include size, time range, frequency of updates, and authenticity of data sets.

Offering this transparency may introduce some friction early on, but users are likely to appreciate that we have their long-term interests in mind.
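Both mitigations can be attached to every response rather than buried in a settings page. A minimal sketch; the disclaimer wording, the `TrainingDataSummary` fields (which mirror the characteristics listed above), and the `present` helper are hypothetical, and the values are deliberately placeholders since the real figures depend on the model and data actually used.

```python
from dataclasses import dataclass

# Illustrative disclaimer wording, paraphrasing the limitation described above.
DISCLAIMER = ("Wellness suggestions are AI-generated and may be inaccurate. "
              "They are not a source of truth.")


@dataclass
class TrainingDataSummary:
    """Data characteristics to surface to users."""
    size: str
    time_range: str
    update_frequency: str
    authenticity: str

    def render(self) -> str:
        return (f"About our data: size {self.size}; time range {self.time_range}; "
                f"updated {self.update_frequency}; authenticity: {self.authenticity}.")


def present(response: str, data: TrainingDataSummary) -> str:
    """Attach the disclaimer and the data summary to a chatbot response."""
    return f"{response}\n\n{DISCLAIMER}\n{data.render()}"


# Placeholder values only; fill these in from the actual training data.
summary = TrainingDataSummary("<size>", "<start> to <end>", "<frequency>", "<how sources were vetted>")
print(present("Take a break from work and relax.", summary))
```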

S: Surface Insights

Exposing the limitations of our solution is only one approach at our disposal to increase awareness of the risks associated with our product. While new areas of research are just beginning to emerge around AI explainability and trustworthiness, and no approach is without its challenges and limitations, a thoughtful combination of techniques can go further and offer transparency into model decision making itself. Surfacing insights to users directly can equip them with the data they need to make educated decisions of their own.

Pulling from findings in our third step, taking note of characteristics, we can identify complex technical areas that may be in need of explainability insights.

We previously found some defining characteristics of LLMs to be self-supervised training, transformer-based deep learning architectures, and the generation of human-like language. Together, these produce responses that seem personal, coherent, and relevant but may at times be factually incorrect. We’ll focus on offering transparency into the risk of inaccuracy from our model and into its hidden complexity.

Inaccurate Outputs:

While explainability techniques alone will not determine factual correctness, they can offer insight into the model's own confidence in its responses, in terms of how likely those responses are given its training data.

Hidden Complexity:

Explainability techniques can offer insight into why the model produced the output it did, despite the complexity of its internal process.

For this exercise, let’s assume that a user has accessed our LLM-powered chatbot interface and is seeking ways to reduce stress. As there is a growing number of available explainability techniques, this example will reference only a small subset of them.

User:

Best way to reduce my stress?

Chatbot:

Take a break from work and relax.

Confidence Scores

These express the degree of certainty the model has in its prediction. They indicate how likely the model considers its output to be, based on its training data.

Two common ways to communicate this are numerical and categorical values:

Numerical: A percentage of probability.

Categorical: Confidence grouped into labels expressed in words rather than numbers, such as High / Medium / Low.
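Both presentations can come from the same underlying probability. A minimal sketch; the cut-offs in `THRESHOLDS` are hypothetical and would need calibration and user testing before use.

```python
# Hypothetical cut-offs, checked from highest to lowest.
THRESHOLDS = [(0.6, "High"), (0.3, "Medium"), (0.0, "Low")]


def categorical_confidence(probability: float) -> str:
    """Map a probability in [0, 1] to a High / Medium / Low label."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    for cutoff, label in THRESHOLDS:
        if probability >= cutoff:
            return label
    return "Low"  # not reached given the 0.0 cut-off; kept as a safe default


def numerical_confidence(probability: float) -> str:
    """Format a probability as a whole-number percentage."""
    return f"{probability:.0%}"


print(categorical_confidence(0.7), numerical_confidence(0.7))  # High 70%
```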

Let's put our example into practice.

We can use the output probabilities from the model to quantify the confidence in this response. Let's suppose that the model assigns the following probabilities to the top-k responses:

Response 1: Take a break from work and relax. (probability: 0.7)

Response 2: Exercise regularly. (probability: 0.2)

Response 3: Spend time with loved ones. (probability: 0.1)

In this case, the model assigns a high probability (0.7) to the original response, indicating that it is the most likely response based on the model's training data. We can present this information to the user as follows:

User:

Best way to reduce my stress?

Chatbot:

Take a break from work and relax. (probability: 0.7)

By presenting users with numerical confidence scores, we can provide more detailed and precise information on the model's confidence in each response. However, because numerical scores may be more difficult for our non-technical users to interpret, we can instead look at categorical confidence scores. This would result in a response that resembles the following:

Chatbot:

Take a break from work and relax. (high confidence)

It’s important to emphasize again that, although confidence scores can help us understand the certainty of a model’s prediction relative to its training data, they do not directly help us determine the factual accuracy of a response. In the case of our chatbot, these values may actually mislead our users and erode their trust over time, so we may choose not to surface them in this example.
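The worked example treats each candidate response as having a single probability. An LLM actually scores responses token by token, so a response-level figure has to be assembled. A minimal sketch, assuming the model or API exposes per-token log-probabilities: by the chain rule, a response's log-probability is the sum of its token log-probabilities, and a softmax over the top-k candidates renormalizes them so they sum to 1. The token log-probabilities below are invented so the result reproduces the 0.7 / 0.2 / 0.1 split used above.

```python
import math


def sequence_logprob(token_logprobs: list[float]) -> float:
    """log P(response) = sum over tokens of log P(token | prompt, earlier tokens)."""
    return sum(token_logprobs)


def renormalize(candidates: dict[str, list[float]]) -> dict[str, float]:
    """Softmax over candidate log-probabilities so the k candidates sum to 1."""
    scores = {text: sequence_logprob(lps) for text, lps in candidates.items()}
    m = max(scores.values())  # shift by the max for numerical stability
    exps = {text: math.exp(s - m) for text, s in scores.items()}
    total = sum(exps.values())
    return {text: e / total for text, e in exps.items()}


# Invented per-token log-probabilities for illustration only.
candidates = {
    "Take a break from work and relax.": [math.log(0.5), math.log(0.7), math.log(0.2)],
    "Exercise regularly.": [math.log(0.4), math.log(0.05)],
    "Spend time with loved ones.": [math.log(0.1), math.log(0.1)],
}
probs = renormalize(candidates)  # approximately 0.7, 0.2, 0.1
```

Two caveats follow from this construction: the numbers are relative to the candidate set only, and longer responses multiply more factors below 1, so raw sequence probabilities are biased toward short answers unless they are length-normalized.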

Input Significance

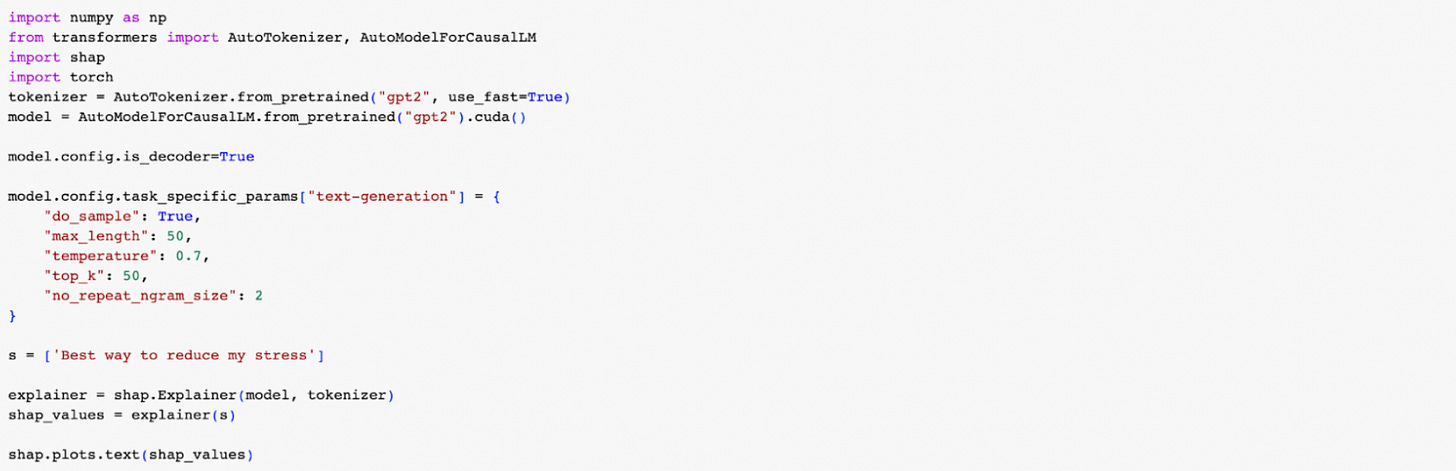

Tools like SHAP (SHapley Additive exPlanations) can be used to identify which parts of the input text are most important in determining the model's response. This can offer users transparency into how much the response they receive from our model could vary based on specific words in their input.
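SHAP builds on Shapley values from cooperative game theory: each input token's attribution is its average marginal contribution to the model's output across all subsets of the other tokens. The `shap` library estimates these values efficiently; the sketch below instead computes them exactly, which is only feasible for a handful of tokens because it needs on the order of 2^n evaluations. The `toy_score` function is a hypothetical stand-in for a model output; it is not GPT-2, and its numbers are invented.

```python
from itertools import combinations
from math import factorial


def shapley_values(tokens: list[str], value_fn) -> list[tuple[str, float]]:
    """Exact Shapley value of each token position under value_fn (a score of a token subset)."""
    n = len(tokens)
    result = []
    for i, token in enumerate(tokens):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = value_fn([tokens[j] for j in sorted(subset + (i,))])
                without_i = value_fn([tokens[j] for j in subset])
                phi += weight * (with_i - without_i)
        result.append((token, phi))
    return result


def toy_score(words: list[str]) -> float:
    """Hypothetical score for the response 'Take a break from work and relax.'"""
    score = 0.0
    if "stress" in words:
        score += 0.5
    if "way" in words:
        score += 0.1
    if "best" in words:
        score -= 0.2
    if "stress" in words and "reduce" in words:
        score += 0.2  # interaction: shared equally between "stress" and "reduce"
    return score


values = shapley_values("best way to reduce my stress".split(), toy_score)
```

The attributions always add up to the difference between the score with all tokens and the score with none (here 0.6), which is the "additive" in SHAP's name.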

Let’s apply this to our example and show the user which parts of the input were most important in generating the response, allowing them to identify potential biases in the model's decision-making process.

User:

Best way to reduce my stress?

Chatbot:

Take a break from work and relax.

Applied to GPT-2, SHAP produces a value plot that displays how much each token in the input text contributed to the model's output.

In this plot, we can see that words such as "stress" and "way" have positive SHAP values, indicating that they were important in generating the model's response. Conversely, the word "best" has a negative SHAP value, indicating that it pushed the model away from this response. This makes sense, as the response does not offer any specific recommendation for the "best" way to reduce stress.

Rather than confusing users who may have difficulty interpreting such a plot, we could highlight key words from their previously entered dialogue in our user interface, as shown below.

It’s worth noting here that these are only approximations and SHAP will not explain model behavior in its entirety. Additionally, SHAP diagrams can be complex, especially for LLMs that have a large number of parameters. Nonetheless, by using SHAP values to explain how a user’s input can alter the output of our LLM powered chatbot, we can provide users with more intuitive explanations concerning the model's responses.
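Turning attributions into highlighted words is straightforward once they are computed. A minimal sketch; the `highlight` helper, the bracket markers, and the attribution values are hypothetical (the values only follow the signs described for the plot above), and a real interface would style the words rather than bracket them.

```python
def highlight(attributions: list[tuple[str, float]], top_n: int = 2,
              open_mark: str = "[", close_mark: str = "]") -> str:
    """Mark the top_n most positively attributed tokens; leave the rest as-is."""
    # Rank positions (not words) so repeated words are handled correctly.
    order = sorted(range(len(attributions)), key=lambda i: attributions[i][1], reverse=True)
    chosen = {i for i in order[:top_n] if attributions[i][1] > 0}
    return " ".join(f"{open_mark}{tok}{close_mark}" if i in chosen else tok
                    for i, (tok, _) in enumerate(attributions))


# Hypothetical attribution values for illustration only.
attrs = [("Best", -0.2), ("way", 0.1), ("to", 0.0), ("reduce", 0.1), ("my", 0.0), ("stress", 0.6)]
print(highlight(attrs))  # Best [way] to reduce my [stress]
```

Python's sort is stable, so when two words tie ("way" and "reduce" here), the one that appears first in the message is highlighted; a product team might prefer to highlight all tied words instead.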

K: Keep Tracking

Once we’ve confidently launched our product with relevant safety techniques embedded into the experience, we should keep tracking how well our mitigations are holding up. Concerns flagged in the risk evaluation step will help inform us on areas to keep in mind.

Inaccuracy of responses:

Number of user complaints: The number of reports that we receive mentioning something may be off with the responses.

Average rating of responses: An average value within some scale, such as 1-5, that users give after receiving a response.

Expert audits: Findings on the quality of responses stemming from our training data, fine-tuning process, and model outputs.

Evaluation of responses:

User surveys and testing: How well users understand our product’s limitations and can interpret model explanations.

Over time, if we detect an uptick in complaints, lower ratings, negative audit findings, or poor survey and testing results, we should evaluate whether modifications are needed to our objectives, chosen technologies, or implemented risk mitigations.
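Part of this tracking can be automated. A minimal sketch comparing a current period against a baseline for the first two metrics above; the `PeriodMetrics` structure and the thresholds (a 1.5x rise in complaint rate, a drop of more than 0.5 in average rating) are hypothetical. Complaints are normalized by conversation count as our own assumption, since raw counts also rise simply because usage grows.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PeriodMetrics:
    complaints: int      # reports that something seems off with responses
    ratings: list[int]   # per-response ratings on a 1-5 scale
    conversations: int   # total conversations in the period (must be > 0)


def review_flags(baseline: PeriodMetrics, current: PeriodMetrics,
                 complaint_uptick: float = 1.5, rating_drop: float = 0.5) -> list[str]:
    """Return the reasons, if any, to revisit objectives, technology, or mitigations."""
    if baseline.conversations <= 0 or current.conversations <= 0:
        raise ValueError("conversation counts must be positive")
    flags = []
    base_rate = baseline.complaints / baseline.conversations
    curr_rate = current.complaints / current.conversations
    if curr_rate > base_rate * complaint_uptick:
        flags.append("complaint rate up")
    if mean(current.ratings) < mean(baseline.ratings) - rating_drop:
        flags.append("average rating down")
    return flags


# Made-up numbers for illustration only.
baseline = PeriodMetrics(complaints=10, ratings=[4, 5, 4, 4], conversations=1000)
current = PeriodMetrics(complaints=30, ratings=[3, 3, 4, 2], conversations=1200)
print(review_flags(baseline, current))  # ['complaint rate up', 'average rating down']
```

Expert audits and user surveys do not reduce to a single number as neatly, so they are better reviewed by people alongside flags like these.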

In Summary

As AI technologies become increasingly available and integrated into our products and services, a product framework can help navigate ethical AI development. The framework outlined in this article, ASTERISK, provides such a guide for product builders working on AI-centric applications. By assessing objectives, syncing technology, taking note of characteristics, evaluating risks, recognizing user expertise, informing users of limitations, surfacing insights, and keeping track, we can better incorporate the safety of our users as a pillar in an overall improved product experience.

The successful integration of AI technologies, such as generative AI, into our products depends on our ability to balance innovation with ethical considerations, user empowerment, and transparent communication. Through diligent implementation of best practices and guidelines, and ongoing collaboration between industry, academia, and regulatory bodies, we can shape a future where AI-driven products and services truly benefit and safeguard users.

[1] Nestor Maslej, Loredana Fattorini, Erik Brynjolfsson, John Etchemendy, Katrina Ligett, Terah Lyons, James Manyika, Helen Ngo, Juan Carlos Niebles, Vanessa Parli, Yoav Shoham, Russell Wald, Jack Clark, and Raymond Perrault, “The AI Index 2023 Annual Report,” AI Index Steering Committee, Institute for Human-Centered AI, Stanford University, Stanford, CA, April 2023.
